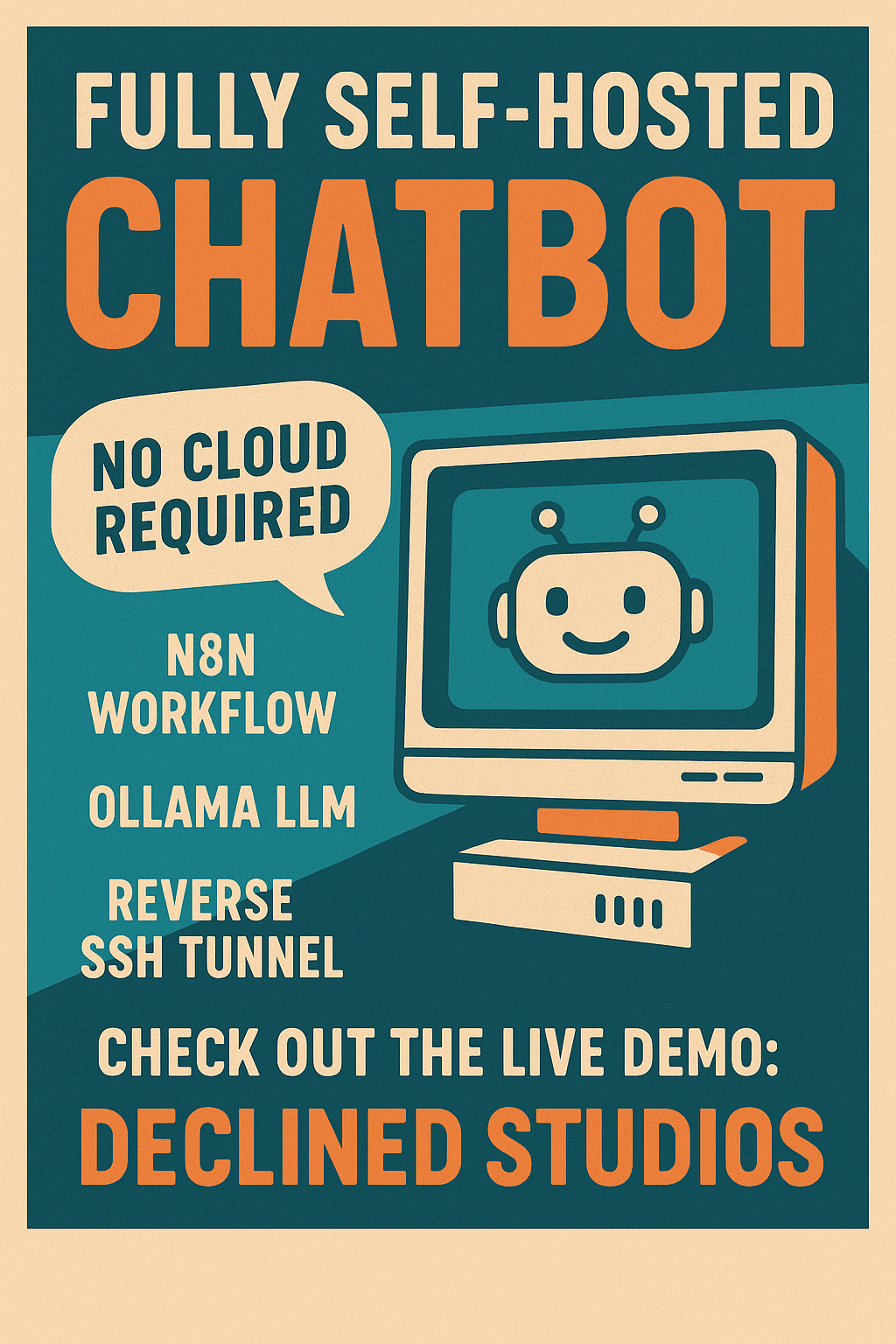

So… I built a chatbot. But not just any chatbot.

This one lives on a real, live website.

It doesn’t phone home to OpenAI, Google, or any mystery cloud server.

It’s powered entirely by my homelab, running n8n workflows, a local LLM served by Ollama, and Qdrant for vector search.

Everything’s self-hosted. Everything’s real-time.

Oh, and it’s piped through a reverse SSH tunnel to my VPS because, well… I like things spicy.
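How the tunnel is set up isn’t shown here. A common way to do it is OpenSSH’s `-R` remote forward: the homelab dials *out* to the VPS, and a port on the VPS gets forwarded back home, so no inbound ports have to be opened on the home network. Below is a minimal Python sketch that builds such a command. The host, user, and ports are hypothetical placeholders (5678 is simply n8n’s default port), and in practice you’d keep the tunnel alive with something like systemd or autossh.

```python
import shlex
import subprocess

# Hypothetical placeholders: the post does not say which host, user, or ports it uses.
VPS_HOST = "vps.example.com"
VPS_USER = "tunnel"
REMOTE_PORT = 5678   # port that will listen on the VPS (bound to the VPS's loopback by default)
LOCAL_HOST = "localhost"
LOCAL_PORT = 5678    # assumed homelab service port (n8n's default)


def reverse_tunnel_cmd(user, host, remote_port, local_host, local_port):
    """Build an OpenSSH command that forwards remote_port on the VPS
    back to local_host:local_port inside the homelab."""
    return [
        "ssh",
        "-N",                                  # don't run a remote command, just forward
        "-o", "ExitOnForwardFailure=yes",      # exit if the remote port can't be bound
        "-o", "ServerAliveInterval=30",        # send keepalives to detect a dead link
        "-R", f"{remote_port}:{local_host}:{local_port}",
        f"{user}@{host}",
    ]


def open_tunnel(cmd):
    """Run the tunnel; blocks for as long as the SSH connection stays up."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmd = reverse_tunnel_cmd(VPS_USER, VPS_HOST, REMOTE_PORT, LOCAL_HOST, LOCAL_PORT)
    print(shlex.join(cmd))  # printed only; call open_tunnel(cmd) to actually connect
```

A web server on the VPS can then proxy chatbot requests to `localhost:5678` there, and they come out on the homelab side.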

🧠 What It Actually Does

You ask a real question on the site.

It hits my homelab, where an n8n workflow pulls relevant content from Qdrant, hands it to the Ollama model running locally, and sends the answer right back through the tunnel to your screen.
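The real pipeline is an n8n workflow, not code, so treat this as a rough Python sketch of a typical retrieve-then-generate flow rather than the actual setup: embed the question, search Qdrant for similar content, then let the local model answer using those passages. It assumes Ollama and Qdrant are reachable over their REST APIs on default ports (11434 and 6333). The model names, the collection name, and the `"text"` payload field are hypothetical.

```python
import json
import urllib.request

# Assumed endpoints (default ports); model and collection names are hypothetical.
OLLAMA = "http://localhost:11434"
QDRANT = "http://localhost:6333"
EMBED_MODEL = "nomic-embed-text"
CHAT_MODEL = "llama3"
COLLECTION = "site_content"


def post_json(url, payload):
    """POST a JSON payload and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)


def embed(text):
    # Ollama's /api/embed returns {"embeddings": [[...]]}, one vector per input.
    body = {"model": EMBED_MODEL, "input": text}
    return post_json(f"{OLLAMA}/api/embed", body)["embeddings"][0]


def search(vector, limit=3):
    # Qdrant's search endpoint returns {"result": [{"id", "score", "payload"}, ...]}.
    body = {"vector": vector, "limit": limit, "with_payload": True}
    hits = post_json(f"{QDRANT}/collections/{COLLECTION}/points/search", body)["result"]
    # Assumes each point was stored with its source text under payload["text"].
    return [hit["payload"]["text"] for hit in hits]


def generate(prompt):
    # With "stream": False, Ollama returns a single object with a "response" field.
    body = {"model": CHAT_MODEL, "prompt": prompt, "stream": False}
    return post_json(f"{OLLAMA}/api/generate", body)["response"]


def build_prompt(question, passages):
    """Put the retrieved passages in front of the question."""
    context = "\n\n".join(passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question, embed_fn=embed, search_fn=search, generate_fn=generate):
    """Retrieve relevant passages first, then ask the local model to answer."""
    passages = search_fn(embed_fn(question))
    return generate_fn(build_prompt(question, passages))
```

With both services running, `answer("What are your opening hours?")` would return the model’s reply as a string. The three network calls are passed in as parameters so each step can be swapped or tested on its own. That is roughly what separate nodes in an n8n workflow give you.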

All of that happens in seconds, and the only server outside my homelab it touches is my own VPS.

🔐 No Vendors, No Subscriptions, No Problem

- 💡 No OpenAI keys.

- 🔒 No data ever leaves infrastructure I control.

- 🛠️ Everything is locally maintained.

- 🔧 I can adapt this to an internal tool, a customer support site, a product knowledge base, you name it.

🤖 Want One?

If you want a chatbot on your site that actually understands your content, doesn’t leak data, and feels like magic to your users…

I’ll build you one.

Back end. Front end. Fully integrated. Internal or external.

📹 Check Out the Demo

Here’s the full demo, running on real gear:

It’s practical, private, and wildly powerful.

This little chatbot project is just one example of what’s possible when you own your tools and your infrastructure.

You don’t need to rent intelligence from the cloud. You can own it.